3D Modeling - Point clouds vs meshes

Three-dimensional modeling is far from a new technology, but the methods and devices used to make 3D models are developing and improving rapidly. Quite a few technologies are available to anyone with the interest (and budget) to capture accurate digital models of the physical world: laser scanners, structured light scanners, LiDAR sensors, photogrammetry, and others. Each of these technologies has its own benefits and limitations (usually in the form of its scanning range or the environments it can fit or operate in), but they all share the same end goal: to make a point cloud.

There’s lots to say about these technologies but, for today, we’ll be focusing on understanding their end result and the two most commonly used terms to describe the end result: Point Cloud and Mesh.

Point Clouds

Whether you’re using a laser scanner, structured light scanner, LiDAR sensor, or photogrammetry to make a digital model, you’re going to be dealing with point clouds. A point cloud is literally a grouping (or “cloud”) of millions of individual “points” in three-dimensional space that together describe the object being represented. The position of each of those points corresponds to the actual position of a real location on the scanned object. Oversimplifying a bit, the points trace an outline of the shape and size of the object. The figures below should help reinforce the concept.

Point clouds are arguably the most important part of a three-dimensional model when it comes to forensics. That’s in large part because the point cloud is essentially the raw data, or measurements, taken by the laser scanner (or whatever other device is being used). The point cloud is the framework for the model, and it determines the accuracy of any measurements taken from the 3D model.
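To make the idea concrete, here is a minimal sketch of a point cloud as plain data. The coordinates are made up for illustration (a real scan has millions of points and usually arrives in a scanner-specific file format), and the helper names `distance` and `bounding_box` are hypothetical, not part of any scanning software.

```python
import math

# Hypothetical scan data: each point is an (x, y, z) position in meters.
point_cloud = [
    (0.0, 0.0, 0.0),
    (3.0, 0.0, 0.0),
    (3.0, 4.0, 0.0),
    (0.0, 4.0, 1.0),
]

def distance(p, q):
    """Straight-line distance between two scanned points."""
    return math.dist(p, q)

def bounding_box(points):
    """Smallest axis-aligned box holding every point: (min corner, max corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

# A "measurement" is just arithmetic on the stored positions.
diagonal = distance(point_cloud[0], point_cloud[2])
overall_extent = bounding_box(point_cloud)
```

Because every measurement is computed directly from the stored positions, any error in those positions carries straight through to the measurement.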

Meshes

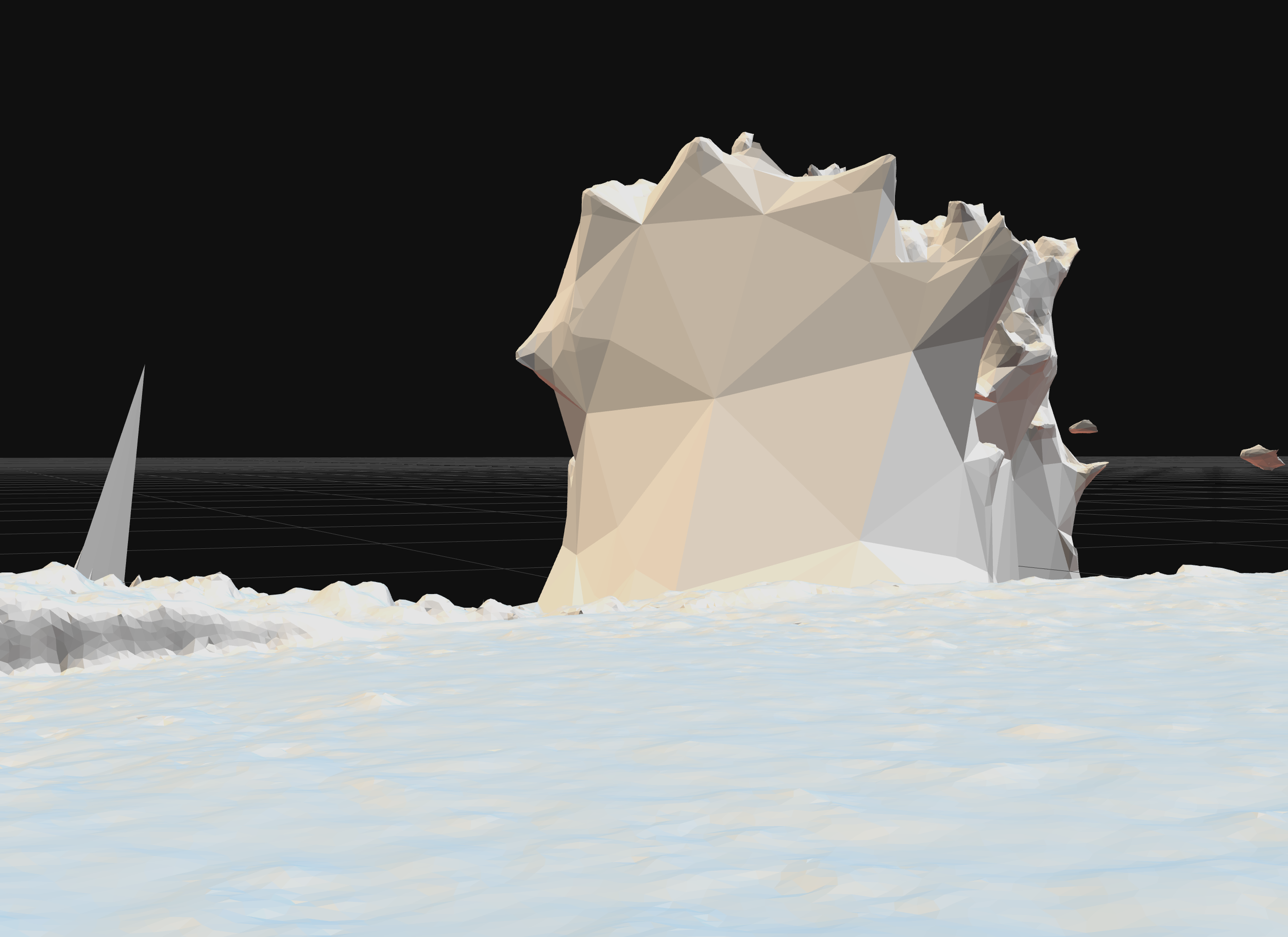

Point clouds form the outline of the object; meshes connect the dots. Specifically, a mesh connects each point to the points around it using tiny, solid triangles that fill the gaps between the points. You can think of a mesh as the skin that wraps around the point cloud, like draping a bed sheet over a birdcage.
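In data terms, a mesh is commonly stored as the same list of points plus a list of triangles, where each triangle names the three points it connects. The sketch below assumes that layout and uses made-up coordinates; `triangle_area` and `mesh_area` are illustrative helpers rather than functions from any particular modeling package.

```python
import math

# Hypothetical data: four scanned points (the "dots").
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (1.0, 1.0, 0.0),
    (0.0, 1.0, 0.0),
]

# Each triangle is three indices into `vertices` (the "connections").
triangles = [(0, 1, 2), (0, 2, 3)]

def triangle_area(a, b, c):
    """Area of a 3D triangle: half the length of the cross product of two edges."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def mesh_area(vertices, triangles):
    """Total surface area covered by the mesh's triangles."""
    return sum(triangle_area(*(vertices[i] for i in tri)) for tri in triangles)

total_area = mesh_area(vertices, triangles)
```

Note that the surface area exists only once the triangles do. The four points alone describe positions; the triangles are an added interpretation of what lies between them.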

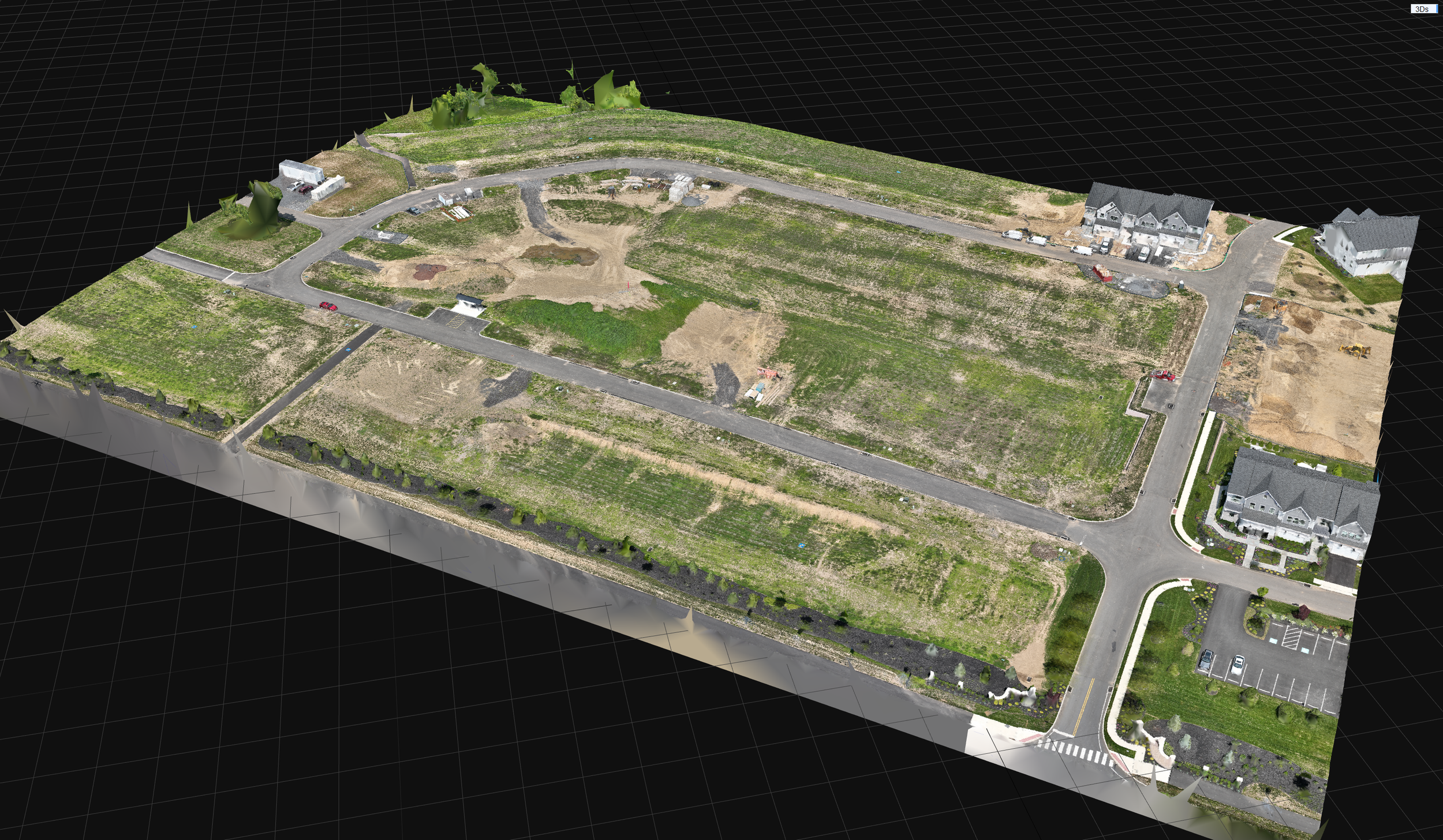

Let’s look at some more examples. The figure below shows a 3D model of a construction site made using photos from a drone (photogrammetry again). From a zoomed-out perspective, you can’t see most of the gaps between the individual points that form the point cloud. As a result, the point cloud and mesh don’t look much different.

Zooming in highlights the differences between point clouds and meshes. The figure below shows a dirt mound from the figure above (the one encircled by the road) in more detail and from a different perspective.

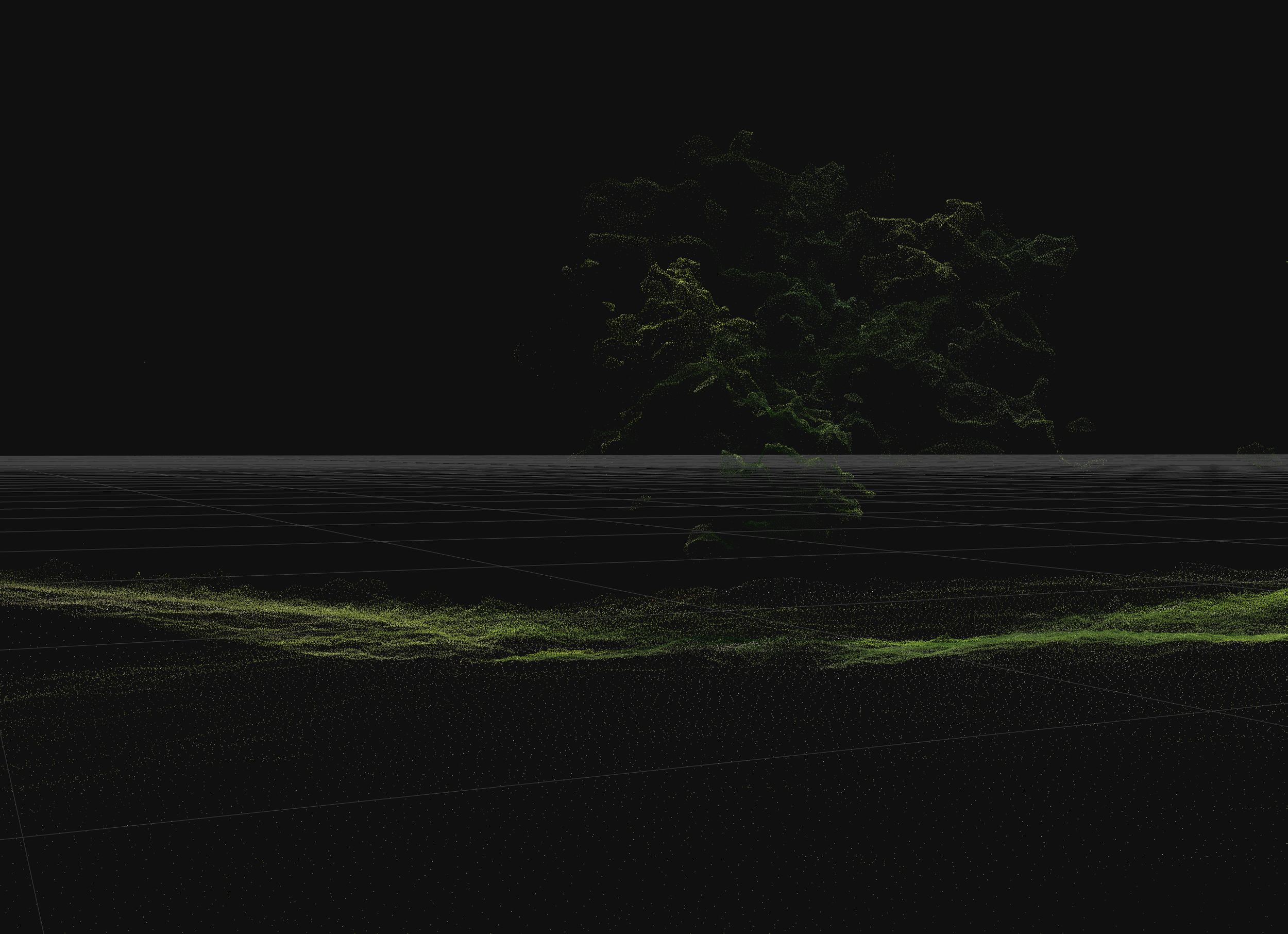

Let’s look at one more figure to really drive the point home. The figure below zooms in on a tree that was only partially captured by the drone photos (the tree is on the top left of the first figure from above). The leaves around the top of the tree were captured by the photos and are fairly well represented by the point cloud. However, the drone photos didn’t capture the lower portions of the tree - leaving large gaps in the point cloud. Nevertheless, the meshing process connected the dots and filled the large gaps in the point cloud, leading to a tree that looks more like a green blob.

Remember when I mentioned that point clouds are arguably the most important part of the model for forensics? This example demonstrates why. The point cloud clearly shows large gaps in the data where no points were collected, while the mesh covers up those gaps. Covering the gaps is desirable when the point cloud is dense enough to accurately define the shape, size, and contours of the object, because the gaps being filled are tiny. But meshing can also hide deficiencies in the model, resulting in a mesh that doesn’t accurately represent the shape, size, or contours of the object.
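One simple way to spot where a mesh may be papering over missing data is to look for unusually large triangles, since a triangle can only be as small as the spacing between the points it connects. The sketch below is a rough heuristic under that assumption, not a standard forensic procedure; the function names, the sample coordinates, and the `max_edge` threshold are all hypothetical and would need to be chosen to suit the scan's actual point spacing.

```python
import math

def longest_edge(a, b, c):
    """Length of the longest side of a triangle given its three corner points."""
    return max(math.dist(a, b), math.dist(b, c), math.dist(c, a))

def flag_gap_triangles(vertices, triangles, max_edge):
    """Return the triangles whose longest edge exceeds max_edge.

    Long edges suggest the triangle spans a region with no scanned points.
    """
    flagged = []
    for tri in triangles:
        a, b, c = (vertices[i] for i in tri)
        if longest_edge(a, b, c) > max_edge:
            flagged.append(tri)
    return flagged

# Hypothetical data: three closely spaced points plus one far-away point.
vertices = [
    (0.0, 0.0, 0.0),
    (0.1, 0.0, 0.0),
    (0.0, 0.1, 0.0),
    (5.0, 5.0, 0.0),
]
triangles = [(0, 1, 2), (1, 3, 2)]

# Threshold in the same units as the coordinates; an assumed value for this example.
suspect = flag_gap_triangles(vertices, triangles, max_edge=0.5)
```

Here the second triangle stretches out to the isolated point and gets flagged, which is the same situation as the tree: the mesh surface exists, but almost none of it is backed by measured points.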

Final Thoughts

Today’s post is a 10,000-foot view of 3D modeling and intentionally skips over important differences between the technologies used to make these models. For example, our first blog post discussed the importance of control points for establishing the accuracy and reliability of photogrammetric models made from drone images. That post nicely highlights why being able to use the equipment and make a model isn’t a substitute for understanding the theory behind the methodology and the limits of the technology.

We’ll be diving deeper into other 3D modeling technologies and use cases in future posts.